Intriguing new research has revealed how much slight changes to the wording of AI chatbot prompts can affect their performance. The study highlights the surprising influence of “positive thinking” on how well language models perform.

About the Study

The study was carried out by Rick Battle and Teja Gollapudi of VMware and published on arXiv, a server for preliminary findings that have not yet been peer reviewed. For their investigation, the researchers used three large language models and a set of 60 human-written prompts designed to encourage the AIs.

Varying Prompts and Their Impact

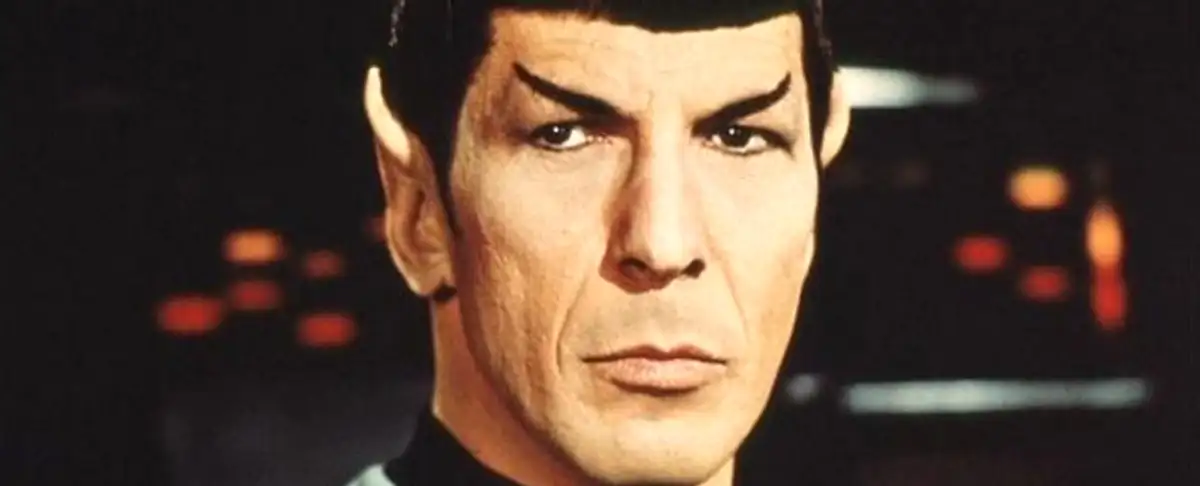

The prompts used in the study varied widely, ranging from positive affirmations to requests that the model think carefully. One striking finding was that one of the top-performing prompts asked the AI to respond as though it were a character from Star Trek. However, this does not mean that instructing an AI to talk like a Starfleet commander will always improve its results.

Chatbot Performance Evaluation

The models were evaluated on how well they solved a dataset of elementary-school-level math problems. Notably, the study found that automatically optimized prompts outperformed the researchers' hand-written attempts to boost the AI with positive thinking.

Understanding the Influence of Prompts

Although certain prompts clearly improve performance, researchers are still struggling to understand why. These models are often described as “black boxes” because their inner workings are so complex and poorly understood. Catherine Flick, another researcher, explained that the model does not actually “understand” the prompt. Instead, the prompt changes which sets of weights and probabilities the model draws on when determining what counts as an acceptable output.

Future Directions

Even though these models remain something of an enigma, new fields of research and even training courses are emerging around how to get the best out of chatbot models. Rick Battle, in particular, advises against writing prompts by hand and recommends letting the AI model generate the prompts instead.